General

Revision A (baseline) Xillybus IP cores have been available for download since 2010, and have been used in projects by hundreds of users worldwide.

Introduced in 2015, the revision B and XL Xillybus IP cores lift the bandwidth limits of the revision A cores in order to meet high-end applications' increasing demand for data. Revision XXL was introduced in 2019. The newer revisions (B, XL and XXL) offer a superset of the features of revision A, but are functionally equivalent when defined with the same attributes (with some possible performance improvements). These newer revisions have also been used in dozens of projects, in particular in commercial products that demand high performance.

The previous bandwidth limitations were partly due to the 32-bit internal data bus employed in revision A cores, as well as to the width of the data bus connecting to the FPGA's PCIe block. The buses of revisions B/XL/XXL are wider (as detailed below), which allows the core to transport data faster, at the cost of a slight increase in logic consumption.

When possible, it's recommended to test the application logic with a revision A core first, and apply a revision B/XL/XXL core once the design is known to work well.

For Xilinx FPGAs, revision B, XL and XXL cores are available for Vivado only.

Revisions B, XL and XXL outlined

A revision B core binary is a drop-in replacement for a revision A. In other words, once a revision B core has been downloaded from the IP Core Factory, it may replace a revision A core with no other change of the logic design. Revision XL/XXL cores, on the other hand, are based upon a slightly different demo bundle, and can't be dropped into a revision-A/B-based design.

The following table summarizes the currently available revisions and their merits.

| Revision | Related demo bundle | Bandwidth multiplier | Maximal bandwidth | Internal data width (bits) | Width of PCIe block bus (bits) | Allowed user interface data widths (bits) |
|---|---|---|---|---|---|---|
| Revision A | Baseline | ×1 (baseline) | 800 MB/s | 32 | 64(*) | 8, 16, 32 |
| Revision B | Baseline | ×2 | 1700 MB/s | 64 | 64 | 8, 16, 32, 64, 128, 256 |
| Revision XL | XL bundle(**) | ×4 | 3500 MB/s | 128 | 128 | 8, 16, 32, 64, 128, 256 |
| Revision XXL | XXL bundle(**) | ×8 | 6600 MB/s | 256 | 256 | 8, 16, 32, 64, 128, 256 |

(*) On Xilinx series-7 FPGAs. For other FPGAs, the connection with the PCIe block is 32 or 64 bits wide.

(**) Access to the XL/XXL bundle is given upon request.
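As an illustration of reading the table, the following C sketch picks the lowest revision whose listed maximal bandwidth covers a required data rate. The function name `lowest_sufficient_revision` and the idea of choosing a revision purely by this figure are assumptions made for this example; the numbers are the table's upper limits, and the rate actually attainable also depends on the PCIe link, the host and the CPU load.

```c
#include <stddef.h>

/* Maximal bandwidth per revision in MB/s, as listed in the table.
   These are upper limits, not guaranteed rates. */
struct revision_info {
    const char *name;
    unsigned max_mbps;
};

static const struct revision_info revisions[] = {
    { "A",   800  },
    { "B",   1700 },
    { "XL",  3500 },
    { "XXL", 6600 },
};

/* Returns the name of the lowest revision whose maximal bandwidth is at
   least required_mbps, or NULL if no revision reaches it.
   Hypothetical helper for illustration only. */
static const char *lowest_sufficient_revision(unsigned required_mbps)
{
    size_t count = sizeof(revisions) / sizeof(revisions[0]);

    for (size_t i = 0; i < count; i++) {
        if (revisions[i].max_mbps >= required_mbps)
            return revisions[i].name;
    }
    return NULL;
}
```

For example, a requirement of 1200 MB/s yields revision B, and 3000 MB/s yields XL. Following the recommendation above, testing the application logic with a revision A core first may still be worthwhile.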

Obtaining a revision B / XL / XXL IP core

Revision B / XL / XXL cores are stable and have been successfully integrated into commercial products. Access to them is granted only upon request by email. There is, however, no particular requirement for obtaining this access; the application process merely serves to keep in closer contact with high-end users.

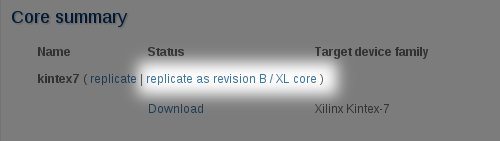

These cores are generated in the IP Core Factory by invoking the core summary of an existing revision A (baseline) core, and creating a replica of it, targeting a revision B/XL/XXL core (see example image below).

This option is not enabled by default. Users who are interested in revision B / XL / XXL IP cores should instead apply by email to have their IP Core Factory account enabled for replication to revision B / XL / XXL. The request should state the email address registered at the IP Core Factory, so that the correct account can be found.

Using 64, 128 and 256 bits wide interfaces

While revision A cores allow application data widths of 8, 16 and 32 bits, revisions B, XL and XXL also allow 64, 128 and 256 bit wide interfaces. The main motivation is to make it possible to utilize the full bandwidth capacity with a single stream. However, as with revision A cores, the bandwidth can also be divided among several streams (possibly 32 bits wide), so that their aggregate bandwidth reaches the limit (usually with a 5-10% degradation). Data widths can therefore be chosen as convenient.

The wider interfaces are allowed regardless of whether they increase the bandwidth capability for a given configuration. For example, the user application logic may connect to Xillybus' revision B / XL / XXL IP core with a 256 bit data bus even if the maximal theoretical data rate through these 256 bits exceeds the PCIe bus' capacity. These data widths are unrelated to the width of the bus connecting the core with the PCIe block.

When using these wider data interfaces, note that since the natural data element of the PCIe bus is a 32-bit word, some software safeguards that prevent erroneous use of streams do not apply when the data width exceeds 32 bits. For example, any read() or write() call on a 64-bit stream must have a length that is a multiple of 8. Likewise, the seek positions of a seekable 64-bit wide stream must be a multiple of 8 to achieve any meaningful result. The driver, however, only enforces a multiple of 4.

In conclusion, when the data width is above 32 bits, the application software bears more responsibility for performing I/O with a full word's granularity. The granularity rules themselves are no different for wider streams, but unlike with 32 and 16 bit streams, the driver will not necessarily enforce them.
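A minimal sketch of how application code might enforce full-word granularity itself, assuming a Linux/POSIX host where the stream is accessed through a file descriptor. The helper names `write_words` and `seek_words` are hypothetical, as is any device file name they would be used with (e.g. a 64-bit stream opened as `/dev/xillybus_write_64`). Besides checking the length, `write_words()` retries partial writes, since a single write() call may accept fewer bytes than requested.

```c
#include <errno.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Writes exactly len bytes to fd, retrying on partial writes and EINTR.
   word_bytes is the stream's data width in bytes (8 for a 64-bit stream).
   Returns 0 on success, -1 on error with errno set.
   Hypothetical helper for illustration only. */
static int write_words(int fd, const void *buf, size_t len, size_t word_bytes)
{
    const char *p = buf;

    /* For streams wider than 32 bits the driver only checks for a
       multiple of 4, so the full word granularity is checked here. */
    if (word_bytes == 0 || len % word_bytes != 0) {
        errno = EINVAL;
        return -1;
    }

    while (len > 0) {
        ssize_t n = write(fd, p, len);
        if (n < 0) {
            if (errno == EINTR)
                continue;       /* interrupted by a signal: retry */
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    return 0;
}

/* Seeks a seekable stream to an absolute offset, refusing offsets that
   are not a multiple of the word size. Returns the new offset, or -1. */
static off_t seek_words(int fd, off_t offset, size_t word_bytes)
{
    if (word_bytes == 0 || offset % (off_t)word_bytes != 0) {
        errno = EINVAL;
        return (off_t)-1;
    }
    return lseek(fd, offset, SEEK_SET);
}
```

With `word_bytes` set to 8, a 12-byte write or a seek to offset 12 is rejected in user space with EINVAL, rather than being passed to the driver, which would only check for a multiple of 4.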

Attaining maximal bandwidth

Transporting data at high rates makes the CPU the bottleneck more often than most people believe. It's highly recommended to monitor the CPU consumption of the data handling process as it runs, and to verify that it has a considerable percentage of idle time.

For high performance data transport, the optimal size of the data buffers used by read() and/or write() calls usually ranges between 128 kB and 1 MB. The buffer size affects the operating system's overhead for processing system calls: when the buffers are smaller, this overhead may become very significant.

Effects related to context switches may become significant too when the buffers are large, and when multiple streams are used to reach a total aggregate bandwidth. The buffers' size compared with the CPU's cache size can play a role as well.

It's therefore suggested to experiment with the size of these buffers to reach the optimal performance on a given system. The DMA buffers' size and count usually have little or no significance, as long as their total storage exceeds 10 ms worth of data at the expected data rate.
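The 10 ms rule of thumb translates into a simple calculation. The sketch below (the function name is hypothetical) returns the smallest number of DMA buffers of a given size whose total storage exceeds 10 ms worth of data at the expected rate.

```c
#include <stdint.h>

/* Smallest number of DMA buffers, each buf_bytes long (must be nonzero),
   whose total storage exceeds 10 ms worth of data at rate_bytes_per_sec.
   Hypothetical helper for illustration only. */
static uint64_t min_dma_buffers(uint64_t rate_bytes_per_sec, uint64_t buf_bytes)
{
    uint64_t bytes_per_10ms = rate_bytes_per_sec / 100;

    /* Integer division rounds down; adding one guarantees that
       count * buf_bytes is strictly greater than bytes_per_10ms. */
    return bytes_per_10ms / buf_bytes + 1;
}
```

For example, at 1700 MB/s (1.7·10^9 bytes/s), 10 ms amounts to 17,000,000 bytes, so 130 buffers of 128 kB (131072 bytes) are needed: 129 of them hold only 16,908,288 bytes, while 130 hold 17,039,360 bytes.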

A separate page discusses best practices for high-bandwidth applications.

ECRC trimming requirement

Xilinx' PCIe block must be configured to trim the ECRC if it is present on incoming TLPs. As ECRC is rarely used in practical systems, failing to meet this requirement is likely to result in a system that works perfectly well on almost all hosts, but fails completely on the fraction of hosts that employ ECRC. Baseline demo bundles with version 1.2d and later, as well as all XL demo bundles, have this configured properly.

Narrow streams

On revision A (baseline) cores, streams with 8 or 16 bits of data width reduce the effective data bandwidth by a factor of 4 and 2, respectively. This is not the case with revision B / XL / XXL cores: thanks to a change in the internal data handling, several 8 and 16 bit streams can together utilize the full bandwidth (with a typical degradation of 5-10% due to CPU overhead).

Hence revision B cores may be the preferred choice for applications requiring several narrow streams, even when the total bandwidth is below the relevant revision A core's limit.
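Taking the 800 MB/s figure from the table as the revision A limit, and assuming that all the traffic goes through narrow streams of width $w$ bits, the waste factors above translate into the following approximate ceilings on useful data:

```latex
B_{\text{eff}} = B_{\max} \cdot \frac{w}{32}, \qquad
800~\text{MB/s} \cdot \frac{8}{32} = 200~\text{MB/s}, \qquad
800~\text{MB/s} \cdot \frac{16}{32} = 400~\text{MB/s}
```

With revision B / XL / XXL cores, no such scaling applies, apart from the typical 5-10% degradation mentioned above.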