TL;DR

This page says what should be obvious: USB BULK endpoints guarantee neither bandwidth nor latency. To make that point, test results on several USB 3.x controllers show how these controllers halt the data flow randomly and inconsistently, each with its own specific pattern. They are of course allowed to do so, and yet it may be surprising how differently each controller behaves.

The lesson to be learned is that if halting the application’s data stream at random, arbitrary moments breaks its functionality, significant buffering is required on the device (i.e. in hardware). This is particularly important in data acquisition applications.

It may be daunting to read the detailed description of the test settings as well as the USB protocol-related discussions below, and neither is really necessary: All you need to know is that the peaks in the graphs shown on this page indicate a halt of the data flow, and that the height of each peak is the duration of that halt. Any application that is based upon BULK endpoints, XillyUSB included, must be able to tolerate such pauses in the data flow.

The issues displayed on this page are not XillyUSB-specific. The low-level packet exchange has been analyzed to ensure that the XillyUSB device behaves correctly in every unusual situation, and XillyUSB’s diagnostic tool was run during these tests to ensure that neither side detected any low-level errors. In other words, XillyUSB did nothing to provoke these situations, so similar behavior is expected with any SuperSpeed device.

Overview

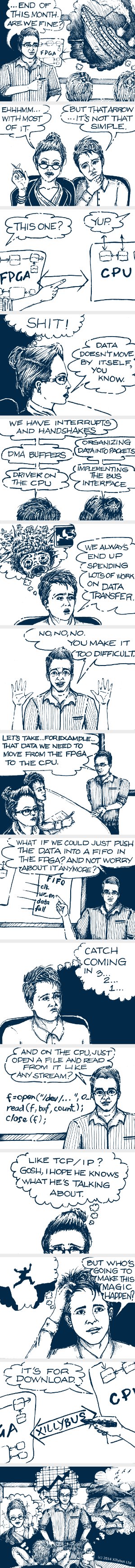

When developing an FPGA project that involves an interface with a PC, it’s usually important that it works with a variety of computers, and not just the one on which it was developed. The possible differences between computers are often overlooked, on the assumption that a standard interface behaves similarly everywhere. This is indeed fairly correct when the interface gives the FPGA control over the data flow, in particular when the FPGA has DMA master access to the processor’s main memory bus, as facilitated by the PCIe bus (or other bus interfaces on embedded / FPGA combo devices). This may however be fatally wrong for a USB-based transport.

Certain applications, data acquisition in particular, produce data at a fixed rate. Halting the data flow temporarily is not an option, and hence the data must be consumed at the rate it’s generated. A common solution is to store the data directly in the computer’s main memory by virtue of DMA, for example by using Xillybus’ IP core for PCIe. This typically works with quite small buffering on the FPGA side, because the computer’s bus arbiter grants access for the DMA transfers quickly enough. This can be relied upon across different computer hardware when the requested DMA bandwidth is significantly smaller than the computer’s full bus capacity.

By contrast, when the interface is based upon USB, the device functions as a slave and has no direct control over when the data exchange occurs: The data exchange is initiated by the USB host controller. The USB protocol (for all versions and data rates) treats BULK transfers as a transport for data with no constraint on how soon the data transactions are made, nor on how well the physical bandwidth is utilized. In other words, a USB host controller may wait an arbitrarily long period of time before processing a properly queued chunk of data for transmission, even if the link is completely idle. It may also do this repeatedly, resulting in an average data bandwidth that is far lower than the underlying physical layer allows for. Even though such behavior results in poor performance, it doesn’t violate the USB spec, nor does it prevent a device from passing certification tests.

For a data acquisition or playback application, this means that significant buffer RAM is required on the FPGA side, possibly using external memory, in order to prevent data loss or underruns. The size of this RAM depends on the data rate in relation to the behavior of the data channel, in particular its tendency to arbitrarily pause communication. As there are no datasheet specifications regarding such pauses, it’s common engineering practice to characterize the behavior based upon the hardware and software at hand. The primary goal of this page is to demonstrate why this practice can turn out fundamentally wrong, even though one may get away with it for low-bandwidth applications.
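As a rough illustration of this sizing consideration, the sketch below computes the FIFO depth needed to absorb a single pause of a given length while data keeps arriving at a constant rate. The function name, the safety factor and the example figures are assumptions for illustration only; as this page shows, the longest pause can’t be known in advance, so the `max_pause_s` argument is a guess, not a specification.

```python
import math


def min_fifo_bytes(rate_bytes_per_s: float, max_pause_s: float,
                   safety_factor: float = 2.0) -> int:
    """Return a FIFO depth (in bytes) that absorbs one pause of max_pause_s.

    While the host pauses, the data source keeps producing at
    rate_bytes_per_s, so the FIFO must hold rate * pause bytes.
    safety_factor is an arbitrary (hypothetical) margin, not a derived value.
    """
    if rate_bytes_per_s <= 0 or max_pause_s < 0 or safety_factor < 1:
        raise ValueError("invalid sizing parameters")
    return math.ceil(rate_bytes_per_s * max_pause_s * safety_factor)


# Hypothetical example: 200 MB/s acquisition, assuming pauses of up to 2 ms
depth = min_fifo_bytes(200e6, 2e-3)
print(f"{depth} bytes ({depth / 2**20:.2f} MiB)")
```

This assumes the FIFO has drained back to empty before the next pause begins; pauses that come in quick succession call for more.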

This is relevant to applications based upon XillyUSB, which relies on BULK USB endpoints. Why Isochronous endpoints aren’t used by XillyUSB is explained on a separate page.

Methodology

Bandwidth performance tests in both directions were performed on five USB 3.x host controllers, which were selected arbitrarily. Data was transmitted between the host and the FPGA using XillyUSB at the highest possible rate for each setting. Both the average data rate and the time differences between consecutive data payload packets on the USB bus were measured.

The tests were anecdotal, and by no means comprehensive or exhaustive: Their purpose was to demonstrate the variety of behaviors rather than to fully describe and understand each. Doing so would be pointless, as it’s impossible to foresee the behavior of a yet untested USB controller.

Even though the tests were all carried out using XillyUSB, the results are dominated by the behavior of the host’s USB controller. Similar results are therefore expected when using other means to implement the USB 3.0 device.

The operating system was Windows 10 for all tests outlined here. Linux v5.13 only supports the two chipsets by Intel and Renesas listed below, and produced results very similar to those of Windows 10 for these. The other three chipsets have dedicated drivers for Windows only, which apparently work around deviations from the xHCI specification, and possibly silicon bugs.

It’s important to note that none of the results shown below indicate any malfunction or deviation from the USB specification. Even the most unfortunate phenomena displayed below are within the allowed behavior of a USB host controller, even though they indicate ungraceful implementations.

Average data rate performance

To begin, some basic average data rate measurements were made by transmitting data on a single XillyUSB stream and noting the time vs. the amount of data transmitted. Running several streams in parallel may result in a different aggregate result, for better or worse.
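A measurement of this kind can be sketched as writing fixed-size chunks to a stream’s device file for a set duration, and dividing the byte count by the elapsed time. XillyUSB streams are accessed as device files, but any path passed to this function is a placeholder; the chunk size and duration are arbitrary choices.

```python
import os
import time


def measure_write_rate(path: str, duration_s: float = 5.0,
                       chunk_size: int = 1 << 20) -> float:
    """Write chunk_size-byte chunks to path for about duration_s seconds.

    Returns the average rate in bytes per second. os.write() may accept
    fewer bytes than requested, so the returned counts are accumulated;
    the data content is irrelevant here (as with the bogus test data).
    """
    buf = bytes(chunk_size)
    fd = os.open(path, os.O_WRONLY)
    total = 0
    try:
        start = time.monotonic()
        deadline = start + duration_s
        while time.monotonic() < deadline:
            total += os.write(fd, buf)
        elapsed = time.monotonic() - start
    finally:
        os.close(fd)
    return total / elapsed
```

For example, `measure_write_rate("/dev/xillyusb_XX_write")` (a hypothetical path) would report the host-to-FPGA rate of one stream; the opposite direction can be measured the same way with `os.read()` on a read stream.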

Even though not directly related, each USB host controller’s setting and behavior regarding low-power states were noted with the help of the showdiagnostics tool. The detailed meaning of PORT_U2_TIMEOUT is explained on a separate page, but in short: Unless it’s disabled, the USB host controller is expected to attempt to power down the link when it becomes idle (though some didn’t). Whether such attempts were made is given in the “Host power down” column in the table below. Note that like all default XillyUSB IP cores (as of August 2021), the device under test refused all attempts to power down the link, so this had no practical significance.

The three devices by ASMedia and Via Labs require a dedicated Windows driver to support USB 3.0 (even though ASM3142 works fairly well with Linux, as long as no more than one host-to-FPGA stream carries traffic at any given time). Renesas’ device works fully, and seemingly identically, with and without its dedicated driver. Intel’s host controller doesn’t require a dedicated driver.

| Vendor | Chipset | Up BW | Down BW | PORT_U2_TIMEOUT (****) | Host power down |
|---|---|---|---|---|---|
| Intel | B150 (motherboard chipset) | 460 MB/s | 475 MB/s | 10.752 ms | Yes |
| Renesas | uPD720202 | 386 MB/s | 365-376 MB/s (*) | 10.752 ms (**) | Yes |
| ASMedia | ASM1142 | 360 MB/s | 295 MB/s | Disabled | No |
| ASMedia | ASM3142 (***) | 469 MB/s | 471 MB/s | 10.752 ms | No |
| Via Labs | VL805 | 392 MB/s | 289 MB/s | 32.512 ms | No |

(*) Bandwidth measurements vary within the given range.

(**) The PORT_U2_TIMEOUT with driver installed is 10.24 ms.

(***) The device reports itself with misleading PCI ID 1b21:2142. As a result, it's often referred to as ASM2142, a chipset that apparently doesn't exist.

(****) As set in the U2 Inactivity Timeout Link Management Packet (LMP) sent from the USB host controller to the device.
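The PORT_U2_TIMEOUT values in the table fit the USB 3.x encoding of the U2 inactivity timeout in units of 256 μs: 10.752 ms = 42 × 256 μs, 10.24 ms = 40 × 256 μs and 32.512 ms = 127 × 256 μs. The sketch below decodes the raw field under that assumption, additionally assuming that FFh means “disabled”, as it does for the hub’s PORT_U2_TIMEOUT feature. The function name is hypothetical, and 00h is deliberately left unhandled.

```python
from typing import Optional

U2_TIMEOUT_UNIT_US = 256  # assumed unit of the U2 inactivity timeout field


def decode_u2_timeout(value: int) -> Optional[float]:
    """Decode a U2 inactivity timeout field into milliseconds.

    Returns None for 0xFF (assumed to mean the timer is disabled).
    Only 0x01-0xFF are handled in this sketch; anything else raises.
    """
    if value == 0xFF:
        return None
    if not 0x01 <= value <= 0xFE:
        raise ValueError(f"unhandled U2 timeout value: {value:#x}")
    return value * U2_TIMEOUT_UNIT_US / 1000.0


for raw in (42, 40, 127, 0xFF):
    print(f"{raw:#04x} -> {decode_u2_timeout(raw)}")
```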

XillyUSB and USB transfers

Device drivers use the USB bus to exchange data by issuing requests for USB transfers to the USB host controller (xHCI) driver. In Windows, such a request is an IRP, and in Linux it’s called a URB. The principle is however the same: The driver fills a data structure with values that reflect the required data transfer, and allocates a buffer to contain the data. Among other things, this request states the number of bytes to transfer. The driver then passes this data structure to the xHCI driver. A successful fulfillment of a BULK OUT request always means that the requested number of bytes was sent, whereas a BULK IN request may be successfully fulfilled with fewer bytes.
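The asymmetry between BULK IN and BULK OUT completion can be modeled in a few lines. The sketch assumes the standard USB rule that a BULK IN transfer completes once the requested length is reached, or when a short packet (shorter than the maximum packet size, including a zero-length packet) arrives. The class and function names are hypothetical and don’t correspond to the IRP or URB APIs.

```python
from dataclasses import dataclass, field
from typing import Iterable

MAX_PACKET_SIZE = 1024  # SuperSpeed BULK maximum packet size


@dataclass
class BulkInRequest:
    """Hypothetical stand-in for an IRP/URB requesting `length` bytes."""
    length: int
    data: bytearray = field(default_factory=bytearray)
    complete: bool = False


def feed_packets(req: BulkInRequest, packets: Iterable[bytes]) -> int:
    """Append incoming data packets to req until the request completes.

    Returns the number of packets consumed. The request completes when
    the requested length is reached or when a short packet arrives.
    """
    if req.complete:
        return 0
    consumed = 0
    for pkt in packets:
        if len(req.data) + len(pkt) > req.length:
            raise ValueError("packet overruns the request (babble)")
        req.data += pkt
        consumed += 1
        if len(req.data) == req.length or len(pkt) < MAX_PACKET_SIZE:
            req.complete = True
            break
    return consumed
```

For instance, a 64 kiB request that receives three full packets followed by a 100-byte packet completes successfully with 3172 bytes, while a BULK OUT request of the same size would only complete after all 65536 bytes were sent.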

Under load, the XillyUSB IP core’s driver issues transfer requests of 64 kiB each for payload data. This size was chosen with low latency in mind (the theoretical minimum time for transferring this amount of data is 128 μs), but also taking into consideration that the driver can have only a handful of such requests outstanding, and needs to requeue them at the rate they are fulfilled.

BULK OUT transfer requests may be for less than 64 kiB when the host driver has less data than that to send; however, this was never the case in the tests described below, as the streams were kept constantly busy.

Payload data is transmitted on the physical channel by means of data packets, which are up to 1024 bytes long on USB 3.0. The USB 3.0 protocol allows transmission of bursts of data packets on the physical link: The device continuously announces how many packets each endpoint can handle, which allows the host controller to initiate back-to-back transmissions of packets. The protocol allows the device to update this capacity while a burst is in progress, making it possible to keep the burst running indefinitely. The host controller may (and usually does) stop bursts even if the conditions allow their continuation, to give other endpoints access to the bus, or for no apparent reason.

There is no necessary connection between the size of transfer requests and the length of bursts. The USB host controller may split a transfer into several bursts, and may likewise join several transfers into one burst (under certain conditions).

In order to allow for bursts, the XillyUSB core under test maintains buffers for 8 data payload packets for the single BULK IN endpoint it utilizes, and for 4 data packets for each BULK OUT endpoint that relates to a user application stream. This is the setting for all XillyUSB IP cores as of August 2021 (and is not likely to change in the future).

The XillyUSB IP core reports its readiness for bursts according to the number of filled buffers for the BULK IN endpoint at any given time, and likewise the number of vacant buffers for the BULK OUT endpoints. Since the logic that mimics the FPGA application fills the FPGA-to-host streams continuously, and drains the streams in the opposite direction as well, the USB host controller is effectively always permitted to continue bursts indefinitely. In other words, the device always gives a green light to transmission on all its endpoints, and when the host controller pauses, it’s never at the device’s request.
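The flow control described above can be illustrated with a simplified simulation of one BULK OUT endpoint with 4 packet buffers. This is a hypothetical model, not the actual USB 3.0 link-layer mechanism: Time advances in steps of one packet, the FPGA side frees a buffer every `drain_every` steps, and the host sends a packet whenever a vacant buffer is advertised, except on steps listed in `host_pauses`.

```python
def simulate_bulk_out(n_steps: int, buffers: int = 4, drain_every: int = 1,
                      host_pauses: frozenset = frozenset()) -> list:
    """Simulate a BULK OUT endpoint with `buffers` packet buffers.

    On each step, the FPGA side first frees one buffer (if any is filled
    and the step index is a multiple of drain_every). Then the host sends
    one packet if at least one vacant buffer is advertised and the step
    isn't in host_pauses. Returns the steps at which packets were sent.
    """
    filled = 0
    sent = []
    for step in range(n_steps):
        if filled and step % drain_every == 0:
            filled -= 1                 # FPGA application consumes a packet
        vacant = buffers - filled       # readiness advertised to the host
        if vacant > 0 and step not in host_pauses:
            filled += 1                 # host sends one packet
            sent.append(step)
    return sent


print(simulate_bulk_out(10, drain_every=2))  # device throttles when full
print(simulate_bulk_out(6, host_pauses=frozenset({2, 3})))
```

With `drain_every=1` the device never runs out of vacant buffers, so every gap in the returned steps comes from `host_pauses` alone, mirroring the test setup in which every pause is the host controller’s choice.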

FPGA to host tests (single BULK IN endpoint)

The tests in this direction were all done with a single BULK IN endpoint, because XillyUSB uses a single endpoint for all its communication towards the host (except for enumeration and similar control data): Payload data for all FPGA to host application streams, as well as XillyUSB-specific protocol data, are multiplexed into this endpoint in order to mitigate the adverse effects of using separate endpoints for each, as demonstrated below for BULK OUT endpoints.

It’s important to note that if multiple BULK IN endpoints are used by a non-XillyUSB device, significant additional latencies should be expected on top of what is shown here.

The setup for this test was as follows: The FIFO interface of an FPGA-to-host XillyUSB stream was fed with a free-running counter as data, which incremented on each FPGA clock cycle (even when the word wasn’t consumed). The bogus FIFO’s empty signal was held constantly low. Hence the XillyUSB IP core faced what appeared to be a FIFO that never became empty, with each data word reflecting the time at which it was fetched.

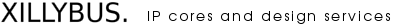

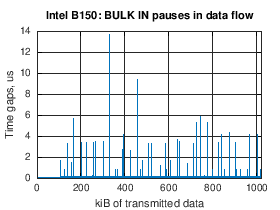

The graphs for this test show the time difference between each data word and the one before it, so that pauses in the data flow appear as spikes. These pauses are a direct result of XillyUSB’s own buffers becoming full, which is in turn a result of the USB host controller not requesting data from the device, even though the device constantly announced that data was available.
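The gap computation behind these graphs can be sketched as follows. Words fetched on consecutive clock cycles differ by exactly one count, so a pause shows up as a larger difference. The sketch assumes 32-bit little-endian counter words and a hypothetical FPGA clock frequency (the actual clock isn’t given here); differences are taken modulo 2**32 so that counter wraparound doesn’t produce negative gaps.

```python
import struct
from typing import List, Tuple

CLOCK_HZ = 100e6  # hypothetical FPGA clock frequency; adjust to the design


def word_gaps_us(data: bytes, clock_hz: float = CLOCK_HZ) -> List[float]:
    """Return the time difference (in μs) between consecutive counter words.

    data holds 32-bit little-endian counter samples as read from the
    FPGA-to-host stream. Differences are taken modulo 2**32 to survive
    the counter's wraparound.
    """
    if len(data) % 4:
        raise ValueError("data length must be a multiple of 4 bytes")
    words = [w for (w,) in struct.iter_unpack("<I", data)]
    return [((b - a) % 2**32) / clock_hz * 1e6
            for a, b in zip(words, words[1:])]


def find_pauses(gaps_us: List[float],
                threshold_us: float = 1.0) -> List[Tuple[int, float]]:
    """Return (index, gap) pairs for gaps exceeding threshold_us."""
    return [(i, g) for i, g in enumerate(gaps_us) if g > threshold_us]


sample = struct.pack("<4I", 10, 11, 12, 10012)  # one 10000-clock halt
print(find_pauses(word_gaps_us(sample)))
```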

These are the graphs for Intel’s and Renesas’ controllers, which represent the pattern for the others as well:

Most of the time, small gaps of less than 1 μs are found between segments of 1024 bytes (one USB 3.0 payload packet), and occasionally there are halts of less than 30 μs. Since these larger occasional gaps appear on all host controllers, they seem to be related to the operating system. They exist on Linux as well, with roughly the same size of the gaps, but with different patterns.

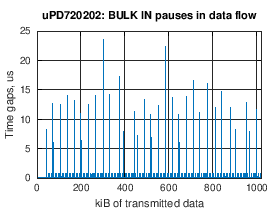

Two controllers behaved exceptionally, however. This is the graph for ASM1142, which paused the data flow for up to ~260 μs at fixed intervals:

The reason for these pauses is not known, but given their magnitude, it seems like some kind of watchdog timer gets the data flow unstuck repeatedly. These gaps occur after 64 kiB of data, which most likely coincides with the size of the USB transfers that are initiated by the XillyUSB host driver. The size of these gaps reduces to about 30-60 μs after a few MB of data (not shown above), or else the bandwidth performance would have turned out significantly worse than listed above.

Once again, as unflattering as this performance is, it’s still within the USB specification.
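A back-of-the-envelope calculation shows why the ~260 μs gaps couldn’t have persisted: If every 64 kiB transfer is followed by a fixed pause, the average rate can’t exceed the transfer size divided by the transfer time plus the pause. The sketch below assumes the 128 μs theoretical minimum per 64 kiB transfer, which is an optimistic assumption; the function name is hypothetical.

```python
def avg_rate_mb_s(transfer_bytes: int, transfer_us: float,
                  pause_us: float) -> float:
    """Upper bound on the average rate with a fixed pause per transfer.

    Bytes per microsecond equals MB/s (with MB = 10**6 bytes).
    """
    return transfer_bytes / (transfer_us + pause_us)


for pause in (260, 60, 30):
    print(f"{pause} us pause -> {avg_rate_mb_s(65536, 128, pause):.0f} MB/s")
```

With a 260 μs pause this gives about 169 MB/s, well below both bandwidth figures listed for ASM1142 in the table above, whereas pauses of 30-60 μs allow roughly 350-415 MB/s.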

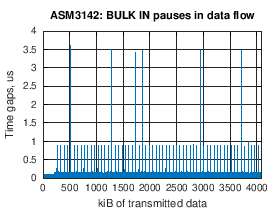

By contrast, a later USB controller by the same company, ASM3142, displayed exceptionally small gaps:

Host to FPGA tests (multiple BULK OUT endpoints)

The XillyUSB IP core uses a separate BULK OUT endpoint for each host-to-FPGA stream it presents to the user application. It was hence tested with six such streams, using six userspace processes, each running a program that writes data to its designated stream at the maximal possible rate. On the FPGA side, the IP core was presented with a bogus FIFO that held its “full” signal low. Accordingly, this setup kept all six streams in a state where data for transmission was always available at the host, and the data sink on the FPGA was always ready to receive the data.

The arriving data itself was discarded, however logic on the FPGA logged the time of arrival of each USB payload packet: The value of a free-running counter was registered on every 1024th byte that arrived, and returned to the host through a XillyUSB application stream, which collected these time events for all six examined streams.

Similar to the graphs for BULK IN, the graphs below show the difference in time between the arrival of USB payload packets. Likewise, spikes on these graphs are a result of pauses in the data flow.

As six BULK OUT endpoints are examined, there are two different types of pauses to consider:

- Complete pauses in all BULK OUT data flow. These are revealed by looking at the aggregation of data packet arrival events for all six streams: The time gaps between these events are a result of pauses in BULK OUT transmissions in general.

- Pauses of a specific BULK OUT endpoint. The data packet arrival events of a specific endpoint are examined for this purpose. The time gaps indicate a pause on that endpoint, which is a result of either serving another endpoint, or a complete pause.
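Both kinds of gaps can be derived from a single log of arrival events. A sketch, assuming each event has already been converted into a (stream index, arrival time) pair and the list is sorted by time; this record format is hypothetical, as the encoding of the logged counter values isn’t described here.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[int, float]  # (stream index, arrival time): hypothetical format


def aggregate_gaps(events: List[Event]) -> List[float]:
    """Gaps between consecutive packet arrivals on any BULK OUT endpoint."""
    times = [t for _, t in events]
    return [b - a for a, b in zip(times, times[1:])]


def per_endpoint_gaps(events: List[Event]) -> Dict[int, List[float]]:
    """Gaps between consecutive packet arrivals, per BULK OUT endpoint."""
    by_stream: Dict[int, List[float]] = defaultdict(list)
    for stream, t in events:
        by_stream[stream].append(t)
    return {s: [b - a for a, b in zip(ts, ts[1:])]
            for s, ts in by_stream.items()}


log = [(0, 0), (0, 2), (1, 4), (1, 6), (0, 8)]
print(max(aggregate_gaps(log)), per_endpoint_gaps(log))
```

In this toy log, the aggregate gaps stay small while endpoint 0 sees a large gap, which is the signature of long per-endpoint bursts rather than a complete pause.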

Recall that USB 3.0 allows bursts of indefinite length to each endpoint: The device can announce how many packets it may accept at any given time, and updates this information as the burst continues. If it keeps allowing more packets, the USB host controller is allowed to continue to send data packets, even if other BULK OUT endpoints are eligible for bus bandwidth. As mentioned earlier, there is no time limit for granting bus bandwidth to an eligible BULK endpoint.

The programs were started one after the other on the host computer by virtue of a script, so traffic on endpoints was kicked off while other endpoints were already in action. This seemingly unimportant technical detail turned out to produce the worst pauses, as is shown next.
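The staggered start-up can be reproduced with a sketch like the one below. It uses threads rather than the separate processes described above, purely for brevity; the stream paths are placeholders, and the stagger and run durations are arbitrary.

```python
import os
import threading
import time
from typing import List


def _writer(path: str, stop: threading.Event, counts: List[int], idx: int,
            chunk: bytes) -> None:
    """Write chunk to path repeatedly until stop is set, counting bytes."""
    fd = os.open(path, os.O_WRONLY)
    try:
        while not stop.is_set():
            counts[idx] += os.write(fd, chunk)
    finally:
        os.close(fd)


def staggered_writers(paths: List[str], stagger_s: float, run_s: float,
                      chunk_size: int = 1 << 16) -> List[int]:
    """Start one writer per path, stagger_s apart, and stop all after run_s.

    Returns the number of bytes each writer managed to write.
    """
    stop = threading.Event()
    counts = [0] * len(paths)
    chunk = bytes(chunk_size)
    threads = []
    for i, p in enumerate(paths):
        t = threading.Thread(target=_writer, args=(p, stop, counts, i, chunk))
        t.start()
        threads.append(t)
        time.sleep(stagger_s)  # next stream joins while earlier ones run
    time.sleep(run_s)
    stop.set()
    for t in threads:
        t.join()
    return counts
```

With real stream device files in place of test paths, the returned counts give each stream’s share of the bandwidth. Note that a write blocked on a stalled endpoint delays that thread’s exit until the write returns.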

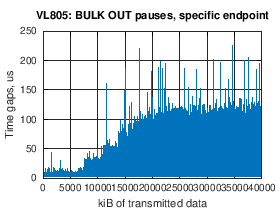

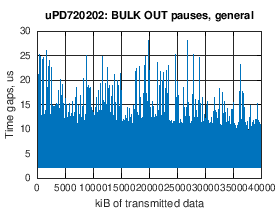

To begin with, this is the graph showing the gaps for one specific endpoint (the first kicked off) on VL805:

The gap magnitudes escalate in five steps, one step for each additional XillyUSB stream that the host opens and pushes data to. Clearly, this device sent short bursts of data to each endpoint, resulting in relatively short pauses for each endpoint.

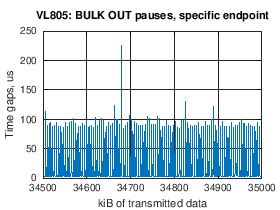

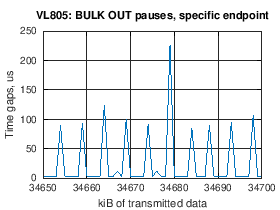

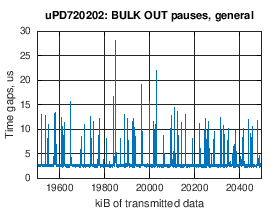

Zooming in on the same graph:

The bursts for each endpoint vary in length, at about 4 kiB each. The other tested devices had different patterns, but similar short bursts were common.

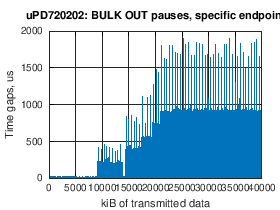

By contrast, consider the same graph for Renesas:

These extremely long gaps of up to 2000 μs are the result of long bursts for each endpoint. This can be seen in a zoomed-in view of the same graph:

Recall that the XillyUSB driver issues transfer requests of 64 kiB each. Evidently, Renesas’ USB host controller doesn’t switch to another BULK OUT endpoint until the transfer is finished, and hence transmits the entire transfer in a single burst. From the graph’s raw data, each such 64 kiB burst took ~172 μs (as opposed to the theoretical minimum of 128 μs). Hence a round-robin sequence among the six BULK OUT endpoints would yield a gap of 5 × 172 μs = 860 μs, which is the gap level appearing most of the time in the two graphs above. The higher peaks indicate that the USB controller doesn’t always make round-robin sequences, producing longer and shorter gaps at random.
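The round-robin estimate above amounts to a one-line formula: In a strict round-robin among n endpoints, each endpoint sits idle while the other n - 1 bursts are transmitted. A minimal sketch with the figures from the text (the function name is made up for this example):

```python
def round_robin_gap_us(n_endpoints: int, burst_us: float) -> float:
    """Idle time seen by one endpoint in a strict round-robin of bursts."""
    if n_endpoints < 1:
        raise ValueError("need at least one endpoint")
    return (n_endpoints - 1) * burst_us


print(round_robin_gap_us(6, 172))  # six endpoints, ~172 us per 64 kiB burst
```

Any departure from a strict round-robin order lengthens the gap for the endpoint that waits longer, which is consistent with the higher peaks in the graphs.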

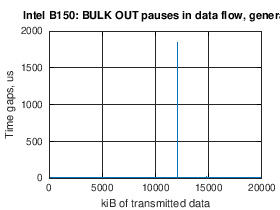

To confirm that this is indeed just an issue of long bursts for each endpoint, consider the following graph, showing complete pauses, i.e. the time differences between the data packets of any BULK OUT endpoint (zoomed in at an arbitrary point to the right).

The gaps here are smaller than 30 μs, indicating that the controller actually keeps running. It’s only when looking at a specific endpoint that the gaps get large.

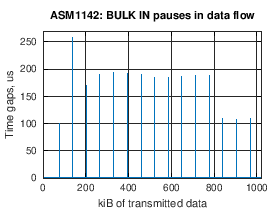

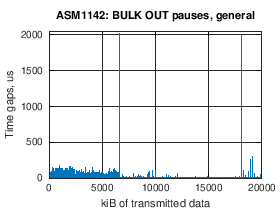

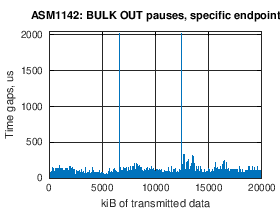

However, there was another, unrelated cause of pauses, also of about 2000 μs, during which the link towards the FPGA was completely idle. This is the graph of pauses for all BULK OUT traffic for ASM1142:

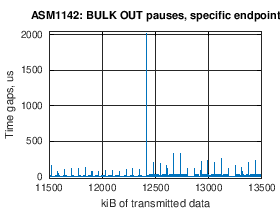

The position of this gap was at the point where a new stream started pushing data. In the graphs, this is evident from the spike coinciding with the step caused by the new endpoint joining in. These are the graphs for the same test, plotted for the BULK OUT endpoint that was kicked off first:

The second spike appears later on the non-specific ("general") graph, compared with the specific endpoint, because the X axis of the non-specific graph includes transmitted data from two endpoints in the segment between the two spikes.

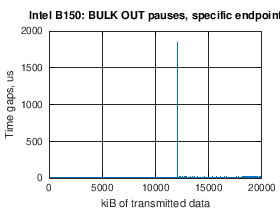

A similar gap also occurred with the Intel controller:

These large gaps didn’t appear consistently in the same positions, and sometimes not at all, so they are not necessarily tied directly to any particular USB controller, even though they seemed to appear more often on some controllers and not at all on others.

It’s not clear why these large gaps occurred exactly as a new BULK OUT endpoint began transmitting data. It could be related to the xHCI driver, or even possibly to the intense memory allocation that goes along with starting a new XillyUSB stream. The exact reason is not necessarily important to find, since the point is that pauses come out of nowhere.

Conclusion

USB BULK endpoints were designed to carry data that isn’t sensitive to time delays, and consequently the USB protocol doesn’t say anything about latency or bandwidth utilization for these. And indeed, SuperSpeed USB controllers take this liberty in different directions. Even though their designs seem to have attempted to perform favorably, it appears that different goals were set for each design. And at times, controllers with obvious practical flaws are found in the market, as they remain within the protocol’s requirements.

As this page merely covers a handful of USB controllers, existing and future USB controllers clearly exhibit other phenomena that aren’t covered here. Any device and its host software must tolerate bandwidth outages significantly larger than those shown here in order to ensure proper functionality with any computer hardware it may encounter.