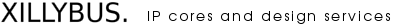

Introduction

XillyUSB is the USB 3.0 counterpart of the well-established Xillybus for PCIe IP core, and can function as a drop-in replacement in several applications where the relatively limited data rate of USB 3.0 is sufficient.

There is, however, one significant difference between the PCIe IP core and XillyUSB, which is a direct result of the underlying transport: XillyUSB, which is based upon BULK endpoints, cannot guarantee how quickly data is transferred to and from its internal buffers. As a result, the data flow may occasionally stall for short periods of time in an unpredictable manner. A separate web page shows some anecdotal yet quantitative test results on this matter.

For data acquisition and playback applications, this has a significant impact on the design of the application logic: While an application can rely on quite shallow FIFOs between itself and the PCIe-based Xillybus IP core, significantly deeper buffers are required with XillyUSB. The difference stems from the practical guarantee given by the PCIe bus to grant data transport access within a few microseconds, as opposed to the occasional data stalls caused by the USB BULK endpoints.

The question is hence: Would it have been better if XillyUSB used Isochronous endpoints instead?

BULK vs. Isochronous endpoints

The USB protocol layer offers two endpoint types for data transfers: BULK and Isochronous. Interrupt endpoints exist as well, but with a tiny data capacity.

The USB protocol guarantees that BULK transfers pass from end to end without errors or data loss by virtue of integrity checks and retransmissions without software intervention, but it doesn’t put any restriction on how soon the data will arrive. Data that is sent over BULK endpoints is assumed to tolerate an indefinite delay or a slow transfer rate.

Accordingly, the USB host controller may delay the initiation of a BULK data transfer for an arbitrarily long time, even if the conditions for performing it are set and the link is completely idle. Also, it may prioritize other endpoints’ transfers (of any type) over any BULK endpoint’s transfer. Even if several BULK endpoints have outstanding requests for data transfers, a host controller is allowed to serve one BULK endpoint indefinitely, effectively causing bandwidth starvation to the others (although the xHCI specification vaguely encourages a fair division of bandwidth between eligible endpoints). Even though such behavior may cause poor practical results, it’s within the protocol’s boundaries and doesn’t prevent a USB host controller from passing a compliance test.

For applications that require a known data rate and limited delay, the USB protocol provides Isochronous endpoints. These endpoints are allocated a fixed number of data payload packets in each 125 μs time frame (a microframe), hence ensuring a certain amount of data transport within this time period. However, Isochronous endpoints are datagram-oriented, offering no retransmission if a packet arrives with errors: A packet that fails the receiver’s integrity check is silently discarded. The protocol offers a means for detecting that a packet was lost, but if a retransmission is required, it must be done by software.
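As a rough illustration of what a fixed per-microframe allocation means in terms of data rate, the arithmetic can be sketched as follows. The function name and the allocation of 8 packets of 1024 bytes are hypothetical values chosen for the example, not figures taken from XillyUSB or quoted from the USB specification.

```python
MICROFRAME_SECONDS = 125e-6  # microframe duration, as stated in the text


def isochronous_rate(packets_per_microframe: int, payload_bytes: int) -> float:
    """Return the data rate (bytes/s) reserved by a per-microframe allocation."""
    return packets_per_microframe * payload_bytes / MICROFRAME_SECONDS


# Hypothetical allocation: 8 packets of 1024 bytes in every microframe
rate = isochronous_rate(8, 1024)
print(f"Reserved rate: {rate / 1e6:.1f} MB/s")
```

With these assumed numbers, the reserved rate comes out at about 65.5 MB/s. The point is only that the rate is fixed by the allocation, not that delivery of any particular packet is guaranteed.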

The applications that were envisioned for use with Isochronous endpoints were domestic USB audio cards and webcams, which require a low latency. What is typical of these applications is that if data is lost, a retransmission is pointless, as the data will arrive too late.

Unfortunately, none of the USB protocol versions addresses the situation where error-free data transmission is required with low latency. In a way it’s understandable, because in theory a packet may be retransmitted forever, so a latency limit cannot be set for a protocol that includes retransmission. It’s nevertheless regrettable that there is no limit for bandwidth starvation of a BULK endpoint, or a limit for how long the data link may be idle in the presence of a BULK endpoint that is eligible for bandwidth.

The absence of such limits allows certification of USB host controllers that get momentarily stuck due to poor design or hardware bugs. Even if such behavior is harmless to some applications (e.g. a disk-on-key), it may cause visible functional degradation to other mainstream devices (e.g. a Gigabit Ethernet adapter, which is forced to drop packets because a USB endpoint stalls briefly).

XillyUSB and BULK endpoints

XillyUSB, like the PCIe-based Xillybus IP core, is designed to present an error-free pipe of data, with no losses between the FPGA and the host. The natural choice of USB transport was hence BULK endpoints.

It’s nevertheless reasonable to ask why Isochronous endpoints are not employed for links that require a certain bandwidth and/or limited latency, in particular high-bandwidth data acquisition or playback applications, where the maximal latency must be compensated for with deep RAM buffers on the FPGA.

So would an Isochronous endpoint eliminate the need for these deep RAM buffers? Not if data packet losses are taken into consideration. If XillyUSB were based upon an Isochronous endpoint, it would have to include a data retransmission protocol implemented partly on the FPGA and partly in the software driver on the host. This is unlike the BULK endpoint’s retransmission mechanism, which is implemented at the link layer, in the hardware of both sides.

But retransmission requires RAM buffers: The FPGA has to save all packets it sends until they’re acknowledged. And for packets received by the FPGA, maintaining a buffer is required in order to ensure a fixed data rate, so that if a packet is lost, there’s enough data to supply to the application logic until the retransmission is completed.
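To make the sender-side requirement concrete, here is a minimal sketch of a retransmission buffer that holds every sent packet until it is acknowledged. It is a generic model written for illustration, assuming sequence numbers and cumulative acknowledgments; the class `RetransmitBuffer` and its methods are hypothetical and do not describe any actual XillyUSB mechanism.

```python
from collections import OrderedDict


class RetransmitBuffer:
    """Hypothetical sender-side buffer: packets are kept until acknowledged."""

    def __init__(self, capacity: int):
        self.capacity = capacity          # number of packets the RAM can hold
        self.pending = OrderedDict()      # sequence number -> payload
        self.next_seq = 0

    def can_send(self) -> bool:
        # A full buffer forces the sender to stall until an ack arrives
        return len(self.pending) < self.capacity

    def send(self, payload: bytes) -> int:
        if not self.can_send():
            raise BufferError("retransmission buffer full; sender must stall")
        seq = self.next_seq
        self.pending[seq] = payload       # keep a copy for a possible resend
        self.next_seq += 1
        return seq

    def ack(self, seq: int) -> None:
        """Cumulative ack: release all packets up to and including seq."""
        while self.pending and next(iter(self.pending)) <= seq:
            self.pending.popitem(last=False)

    def retransmit(self, seq: int) -> bytes:
        """Return the stored payload of a packet reported as lost."""
        return self.pending[seq]


buf = RetransmitBuffer(capacity=4)
for i in range(4):
    buf.send(bytes([i]))
print(buf.can_send())   # buffer is full until something is acknowledged
buf.ack(1)              # packets 0 and 1 are released
print(buf.can_send())
```

The longer the acknowledgment takes to arrive, the larger `capacity` must be for the sender to keep running without stalling, which is why the acknowledgment latency determines the RAM requirement.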

The size of these RAM buffers needs to compensate for a retransmission that involves software running on the host. The latency of this software response includes the delay for allocating CPU time to both the host controller’s driver and Xillybus’ driver. In addition, there’s the delay of submitting acknowledgments and retransmission requests.

The bottom line is hence that if data loss is unacceptable, Isochronous endpoints don’t eliminate the need for deep RAM buffers on the FPGA, nor do they supply a limit for the maximal latency of data. On the other hand, they require a rigid bandwidth allocation at the enumeration stage (which may fail) and a retransmission protocol implemented in software.

This is not to say that a low-latency data stream, based upon Isochronous endpoints, can’t be set up and work in a specific setting: Typically, the link is practically error free, so if neither the USB host controller’s hardware nor its software driver drops packets due to bugs or poor design, data can flow from one end to another with a limited latency of 125 μs. But given that any application that is based upon Isochronous endpoints must tolerate packet losses, it should not be a surprise if some USB controllers drop packets occasionally.

Summary

As XillyUSB (and the PCIe-based Xillybus IP core) is designed to present reliable and error-free data transmission, relying on an Isochronous endpoint never dropping packets is out of the question. Given the need to implement retransmission in software, it therefore follows that Isochronous endpoints have no advantage over BULK endpoints for XillyUSB’s purposes, as deep RAM buffers are required on the FPGA either way.

Of course, this relates only to applications that can’t tolerate brief halts in the data flow. If the application logic on the FPGA can adapt its pace to the data flow (by preventing the intermediate FIFO from overflowing or tolerating that it’s empty, depending on the direction) there is no problem to begin with.
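The difference between a fixed-rate source and one that adapts its pace can be shown with a toy cycle-by-cycle simulation. This is only a sketch under simplifying assumptions: the source produces one word per cycle, the link drains one word per cycle, and a single stall occurs at the start. The function `simulate` and its parameters are hypothetical.

```python
def simulate(depth: int, stall_cycles: int, total_cycles: int,
             flow_control: bool) -> int:
    """Return the number of words lost to FIFO overflow.

    The application logic writes one word per cycle. The link reads one
    word per cycle, except during the first `stall_cycles` cycles.
    """
    fill = 0
    lost = 0
    for cycle in range(total_cycles):
        # Producer side
        if fill < depth:
            fill += 1
        elif not flow_control:
            lost += 1          # fixed-rate source: the word has nowhere to go
        # With flow control, the producer simply holds off this cycle

        # Consumer side: the link is stalled until `stall_cycles` has passed
        if cycle >= stall_cycles and fill > 0:
            fill -= 1
    return lost


# Shallow FIFO, fixed-rate source: the stall causes data loss
print(simulate(depth=16, stall_cycles=100, total_cycles=1000, flow_control=False))
# Same FIFO, but the source pauses when the FIFO is full: nothing is lost
print(simulate(depth=16, stall_cycles=100, total_cycles=1000, flow_control=True))
# Fixed-rate source with a FIFO deeper than the stall: nothing is lost
print(simulate(depth=128, stall_cycles=100, total_cycles=1000, flow_control=False))
```

In this model, a source that cannot pause needs a FIFO at least as deep as the longest stall, whereas a source that can pause gets by with a shallow one.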

It’s worth noting that the depth of the RAM buffers is proportional to the application stream’s data rate. Hence there has been no problem allocating this RAM for applications that were based upon USB 2.0 and earlier, even when fixed data rates were applied to plain BULK endpoints. It’s only when applications make good use of USB 3.0’s physical bandwidth that the buffer depth becomes problematic for implementation on the FPGA’s own RAM resources.
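The proportionality can be sketched with simple arithmetic: the buffer must hold whatever the application produces (or consumes) during the longest stall. The 10 ms worst-case stall and the two stream rates below are assumptions picked purely for illustration; they are not measurements, and the function name is hypothetical.

```python
def buffer_bytes(data_rate_bytes_per_s: float, max_stall_s: float) -> float:
    """Buffer depth needed to ride out a stall at a fixed data rate."""
    return data_rate_bytes_per_s * max_stall_s


MAX_STALL_S = 10e-3  # assumed worst-case stall, illustrative only

# Assumed stream rates, illustrative only
streams = [
    ("USB 2.0-class stream", 30e6),
    ("USB 3.0-class stream", 300e6),
]

for name, rate in streams:
    kib = buffer_bytes(rate, MAX_STALL_S) / 1024
    print(f"{name}: {kib:.0f} KiB")
```

For the same assumed stall, a tenfold increase in data rate requires a tenfold deeper buffer, which is the step that can push the requirement beyond an FPGA's internal RAM.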

It’s also worth mentioning that even though this discussion relates to XillyUSB, it applies likewise to any other USB-based device that requires a fixed data rate and can’t tolerate halts in the data flow.